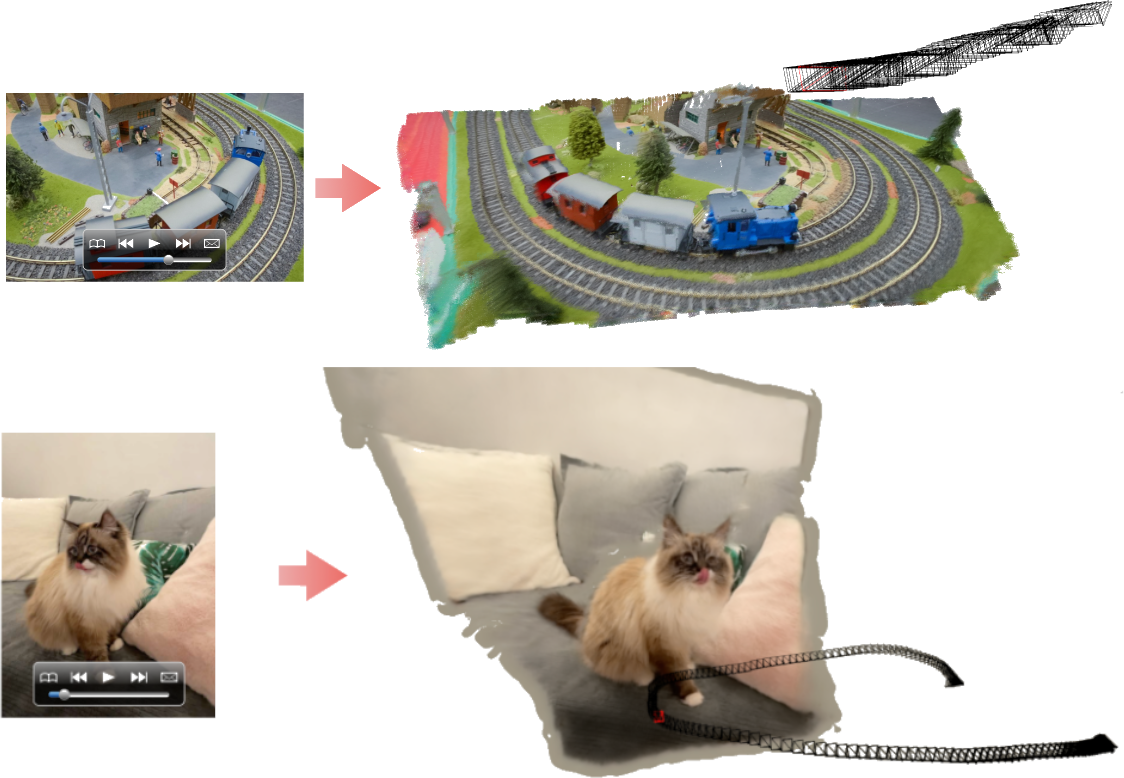

D-NPC: Dynamic Neural Point Clouds for Non-Rigid View Synthesis from Monocular Video

Eurographics (Computer Graphics Forum), 2025

Moritz Kappel (Computer Graphics Lab, TU Braunschweig); Florian Hahlbohm (Computer Graphics Lab, TU Braunschweig); Timon Scholz (Computer Graphics Lab, TU Braunschweig); Susana Castillo (Computer Graphics Lab, TU Braunschweig); Christian Theobalt (Max Planck Institute for Informatics, Saarland Informatics Campus); Martin Eisemann (Computer Graphics Lab, TU Braunschweig); Vladislav Golyanik (Max Planck Institute for Informatics, Saarland Informatics Campus); Marcus Magnor (Computer Graphics Lab, TU Braunschweig)

D-NPC enables fast, non-rigid view synthesis from monocular video by extending Implicit Neural Point Clouds (INPC) to the temporal domain.

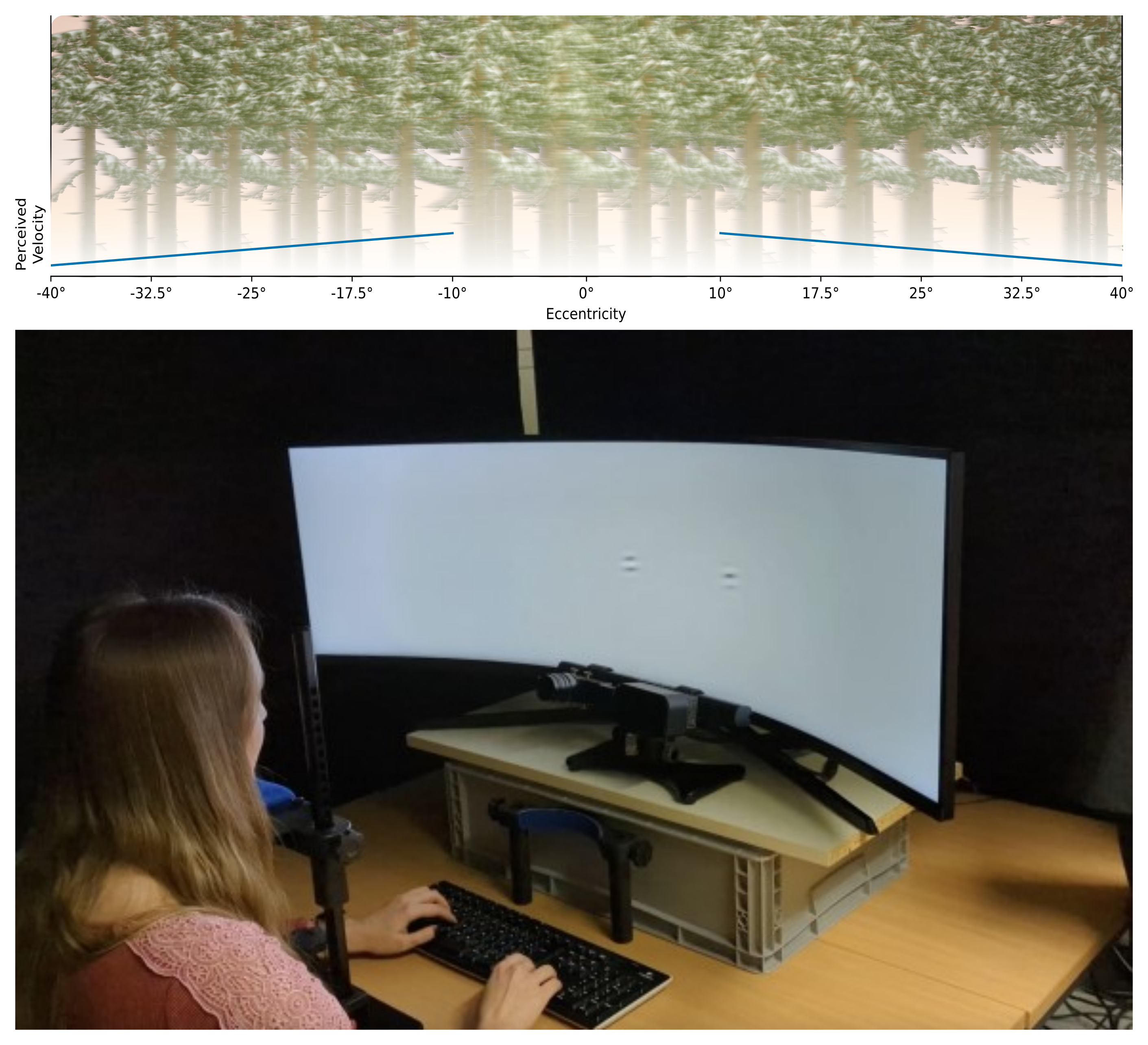

Measuring Velocity Perception Regarding Stimulus Eccentricity

ACM Symposium on Applied Perception (SAP), 2024

Timon Scholz (Computer Graphics Lab, TU Braunschweig); Colin Groth (Computer Graphics Lab, TU Braunschweig); Susana Castillo (Computer Graphics Lab, TU Braunschweig); Martin Eisemann (Computer Graphics Lab, TU Braunschweig); Marcus Magnor (Computer Graphics Lab, TU Braunschweig)

We investigate the influence of temporal frequency and eccentricity of a stimulus on the magnitude of perceived velocity in the periphery, building a model to predict the scaling factor for perceived velocity deviations relative to eccentricity.

Optimizing Temporal Stability in Underwater Video Tone Mapping

Proc. Vision, Modeling and Visualization (VMV), 2023

Matthias Franz (Technische Universität Braunschweig); Bill Matthias Thang (Technische Universität Braunschweig); Pascal Sackhoff (Technische Universität Braunschweig); Timon Scholz (Computer Graphics Lab, TU Braunschweig); Jannis Malte Möller (Computer Graphics Lab, TU Braunschweig); Steve Grogorick (Computer Graphics Lab, TU Braunschweig); Martin Eisemann (Computer Graphics Lab, TU Braunschweig)

We present a method for temporal stabilization of depth-based underwater image tone mapping methods for application to monocular RGB video, improving color consistency across frames.

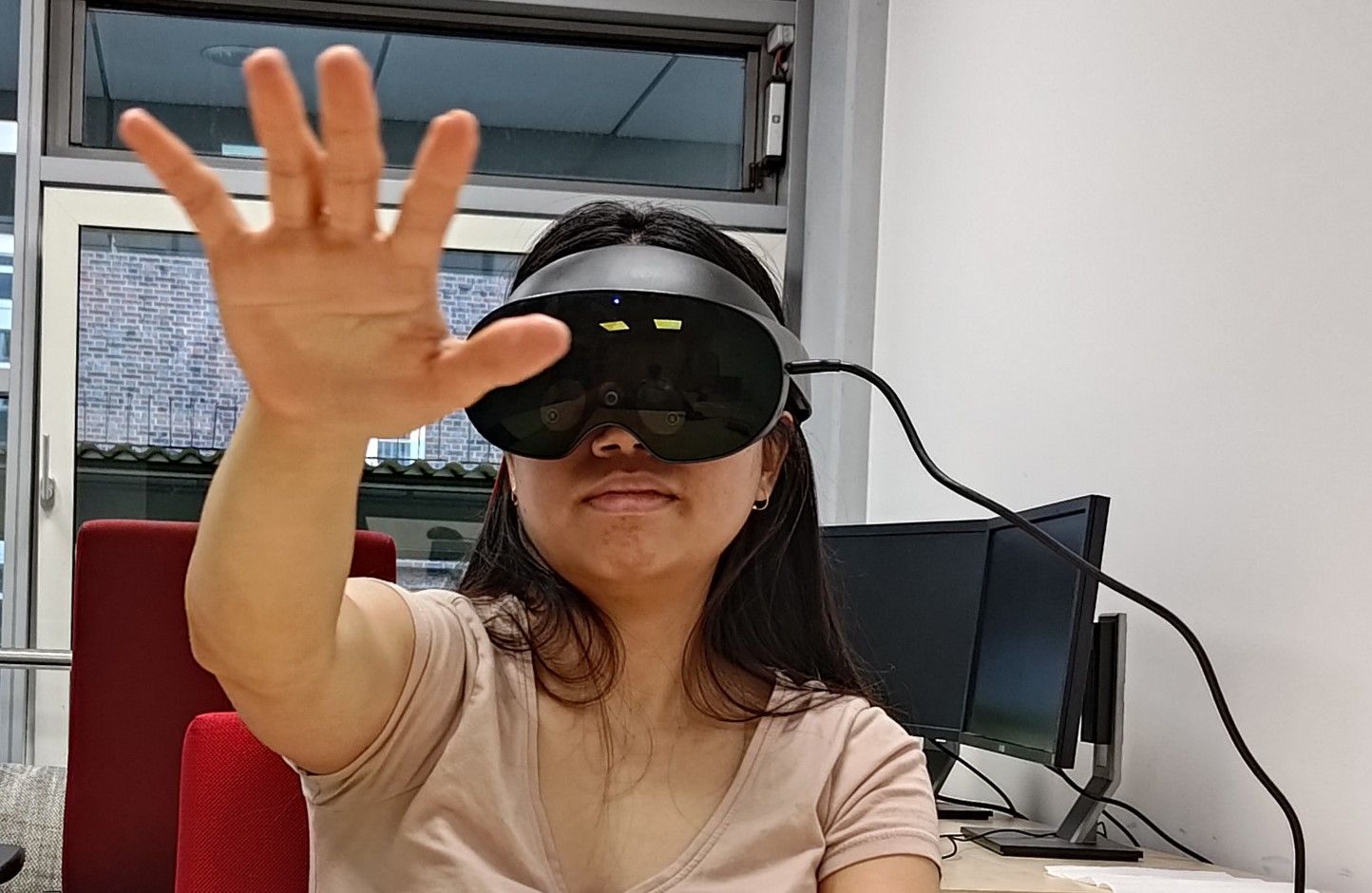

Instant Hand Redirection in Virtual Reality Through Electrical Muscle Stimulation-Triggered Eye Blinks

ACM Symposium on Virtual Reality Software and Technology (VRST), 2023

Colin Groth (Computer Graphics Lab, TU Braunschweig); Timon Scholz (Computer Graphics Lab, TU Braunschweig); Susana Castillo (Computer Graphics Lab, TU Braunschweig); Jan-Philipp Tauscher (Computer Graphics Lab, TU Braunschweig); Marcus Magnor (Computer Graphics Lab, TU Braunschweig)

We propose a method for instant hand redirection in VR using electrical muscle stimulation (EMS) to trigger eye blinks, enabling seamless interaction between real and virtual objects.